By Hugo Salgado, Research and Development at NIC Chile; Dario Gomez, Technology Consultant; Alejandro Acosta, R+D Coordinator at LACNIC

Introduction

In this article we would like to talk about some recent studies we have conducted on a topic we are very passionate about: DNSSEC. Note that we are using the plural term “studies”, as there are two studies on DNSSEC that we began at the same time… please continue reading to find out what these two studies are about!

About DNSSEC

DNSSEC adds security to the DNS protocol by making it possible to verify the integrity and authenticity of DNS data, which prevents spoofing and tampering attacks. It does so through asymmetric cryptography, better known as public/private key cryptography. Using these keys and the digital signatures based on them, a resolver can determine whether a response has been altered. If the signatures are checked and do not match, the chain of trust has been broken and the response cannot be validated as legitimate.

Whether you benefit from DNSSEC depends on your Internet service provider (ISP), which is responsible for configuring validation on its resolvers. Several tools can help you check the DNSSEC status of a domain, such as the following:

https://dnssec-analyzer.verisignlabs.com/

https://dnsviz.net/

As many already know, DNSSEC deployment has grown considerably in recent years. Four aspects have marked this growth:

DNSSEC validation is enabled by default on some recursive servers (e.g., BIND).

Great progress has been achieved in making it easier for major registrars to enable DNSSEC on the different domains.

All major public recursive DNS services perform DNSSEC validation (Google Public DNS, Cloudflare, Quad9, etc.).

Apple recently announced that iOS 16 and macOS Ventura will support DNSSEC validation at the stub resolver.

DNSSEC has always been very important for LACNIC, and we have organized many events and activities around this topic. However, until now we had never conducted our own study on the matter.

What is the study about?

LACNIC’s R&D department wanted to carry out a study to understand the status and progress of DNSSEC deployment in the region.

Data sources

We have two very reliable data sources:

We used RIPE Atlas probes (https://atlas.ripe.net/).

We performed traffic captures (tcpdump) on authoritative servers; the captures were then anonymized.

Dates:

We began gathering data in November 2021. Currently, data is gathered automatically, and weekly and monthly reports are produced. [1]

How can one identify if a server is performing DNSSEC validation?

We need to look at two different things:

Atlas probes:

All available probes in Latin America and the Caribbean are asked to resolve a domain name that has intentional errors in its signatures and therefore cannot be validated with DNSSEC. An error response (SERVFAIL) means that the resolver used by that probe is validating DNSSEC correctly. If, on the other hand, a normal response is obtained (NOERROR), the resolver is not performing any validation. Note that, interestingly, the goal is to obtain a negative response from the DNS server: this is the key to knowing whether the recursive server validates DNSSEC. Now, an example. If you visit dnssec-failed.org and can open the page, it means that your recursive DNS is not performing DNSSEC validation. You shouldn’t be able to open the page! :-)
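The check described above can be sketched in a few lines of Python using only the standard library. This is an illustrative sketch, not the code used in the study: the helper names (`build_query`, `rcode_of`, `classify`, `probe_resolver`) are hypothetical, and only the SERVFAIL/NOERROR interpretation comes from the text.

```python
import socket
import struct

# Hypothetical helper names; only the SERVFAIL/NOERROR logic comes from the article.
RCODE_NOERROR = 0
RCODE_SERVFAIL = 2
QTYPE_A = 1


def build_query(name: str, qtype: int = QTYPE_A, query_id: int = 0x1234) -> bytes:
    """Encode a minimal DNS query: one question, recursion desired."""
    # Header: ID, flags (RD bit set), QDCOUNT=1, ANCOUNT=0, NSCOUNT=0, ARCOUNT=0
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    labels = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in name.rstrip(".").split(".")
    )
    question = labels + b"\x00" + struct.pack("!HH", qtype, 1)  # class IN
    return header + question


def rcode_of(response: bytes) -> int:
    """Return the RCODE, stored in the low 4 bits of the fourth header byte."""
    if len(response) < 12:
        raise ValueError("truncated DNS header")
    return response[3] & 0x0F


def classify(rcode: int) -> str:
    """Interpret the answer obtained for a name with deliberately broken signatures."""
    if rcode == RCODE_SERVFAIL:
        return "validating"
    if rcode == RCODE_NOERROR:
        return "not validating"
    return "inconclusive"


def probe_resolver(resolver_ip: str, broken_name: str = "dnssec-failed.org",
                   timeout: float = 3.0) -> str:
    """Send one UDP query to an IPv4 resolver address and classify the answer."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_query(broken_name), (resolver_ip, 53))
        response, _ = sock.recvfrom(4096)
    return classify(rcode_of(response))
```

Calling `probe_resolver()` with the address of your own recursive resolver would return one of the three labels. Since SERVFAIL can also have causes unrelated to DNSSEC, a careful measurement would likely pair this with a control query for a correctly signed name.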

Traffic captures (tcpdump):

Before sharing what we do with traffic captures, we will first expand a bit on the concept of DNSSEC. Just as DNS has traditional records (A, AAAA, MX, etc.), new records were added for DNSSEC: DS, RRSIG, NSEC, NSEC3, and DNSKEY. In other words, a recursive DNS server can query the AAAA record to learn the IPv6 address of a name and also the Delegation Signer (DS) record to check the authenticity of the child zones. The key to this study is that servers that don’t perform DNSSEC validation don’t query DNSSEC records!

Based on the above, when making the capture, tcpdump is asked to take the entire packet (flag -s 0). Thus, we have all its content, from Layer 3 to Layer 7. While processing each packet, we look for queries for the specific DNSSEC records (again: DS, RRSIG, NSEC, NSEC3, and DNSKEY). If a recursive server asks for any of these records, it is indeed performing DNSSEC validation; otherwise, it is not.
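To make the idea concrete, here is a minimal sketch of how the query type could be read from the DNS payload of a captured packet. It assumes the Layer 7 payload has already been extracted from the packet; the function names are hypothetical, while the numeric type codes are the IANA-assigned values for the records listed above.

```python
import struct

# IANA-assigned type codes for the DNSSEC records named in the article.
DNSSEC_QTYPES = {43: "DS", 46: "RRSIG", 47: "NSEC", 48: "DNSKEY", 50: "NSEC3"}


def question_qtype(dns_payload: bytes) -> int:
    """Return the QTYPE of the first question in a raw DNS message."""
    if len(dns_payload) < 12:
        raise ValueError("truncated DNS header")
    qdcount = struct.unpack("!H", dns_payload[4:6])[0]
    if qdcount < 1:
        raise ValueError("message has no question")
    offset = 12
    # Walk the length-prefixed labels of QNAME until the zero-length root label.
    while True:
        if offset >= len(dns_payload):
            raise ValueError("truncated question name")
        length = dns_payload[offset]
        if length & 0xC0:
            raise ValueError("unexpected compression pointer in question")
        offset += 1
        if length == 0:
            break
        offset += length
    if offset + 4 > len(dns_payload):
        raise ValueError("truncated question")
    qtype, _qclass = struct.unpack("!HH", dns_payload[offset:offset + 4])
    return qtype


def is_dnssec_query(dns_payload: bytes) -> bool:
    """True if the query asks for one of the DNSSEC record types."""
    return question_qtype(dns_payload) in DNSSEC_QTYPES
```

A source address that sends at least one query for which `is_dnssec_query` returns `True` would then be counted as a validating resolver.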

Where is this capture performed?

The capture is performed specifically on one of the instances of the reverse DNS D server (D.IP6-SERVERS.ARPA). The following command is used:

/usr/sbin/tcpdump -i $INTERFAZ -c $CANTIDAD -w findingdnssecresolvers-$TODAY.pcap -s 0 dst port $PORT and ( dst host $IP1 or dst host $IP2 )
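For readers who want to script a similar capture, the sketch below assembles the same command line as an argument list suitable for Python's `subprocess.run`. The function name and every value in the usage note (interface, packet count, port, addresses, date format) are illustrative assumptions; the article does not state the real values behind the shell variables.

```python
from datetime import date


def build_tcpdump_cmd(interface, count, port, hosts, today=None):
    """Build the tcpdump argument list for capturing queries sent to the server."""
    # Assumption: the date suffix uses ISO format (YYYY-MM-DD).
    today = today or date.today().isoformat()
    host_filter = " or ".join(f"dst host {h}" for h in hosts)
    return [
        "/usr/sbin/tcpdump",
        "-i", interface,                                  # capture interface
        "-c", str(count),                                 # stop after this many packets
        "-w", f"findingdnssecresolvers-{today}.pcap",     # write raw packets to file
        "-s", "0",                                        # capture entire packets
        f"dst port {port} and ( {host_filter} )",         # only traffic sent to the server
    ]
```

For example, `build_tcpdump_cmd("eth0", 1000, 53, ["192.0.2.53", "2001:db8::53"])` uses documentation addresses as placeholders. Passing the result to `subprocess.run` would normally require root privileges.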

Processing the data

First, processing the data comprises several steps, all of which are performed entirely using open-source software, specifically, Bash, Perl, and Python3 on Linux.

Second, let’s not forget that there are two sources of information: traffic captures (PCAPs) and Atlas probes. Below is the methodology followed in each case.

Processing of PCAPs: After obtaining the PCAPs, a series of steps are performed, including the following:

Processing of PCAP files in Python3 using the pyshark library.

Removal of unprocessable data (malformed or damaged packets, etc.)

Elimination of duplicate addresses

Anonymization of the data

Generation of results

Generation of charts and open data
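The cleaning, deduplication, anonymization, and counting steps above could look roughly like the following sketch. It does not use pyshark and is not the study's code: it assumes each packet has already been reduced to a pair (source address, whether the query asked for a DNSSEC record), and the anonymization policy shown (truncating to a /24 for IPv4 and a /48 for IPv6) is purely an assumption, since the article does not describe the method used.

```python
import ipaddress


def anonymize(ip: str) -> str:
    """Zero the host part: keep a /24 for IPv4 and a /48 for IPv6 (assumed policy)."""
    addr = ipaddress.ip_address(ip)
    prefix = 24 if addr.version == 4 else 48
    return str(ipaddress.ip_network(f"{addr}/{prefix}", strict=False))


def summarize(observations):
    """observations: iterable of (source_ip, sent_dnssec_query) pairs.

    A resolver counts as validating if any of its queries asked for a DNSSEC record.
    """
    seen = {}
    for ip, dnssec in observations:
        try:
            key = str(ipaddress.ip_address(ip))  # normalizes and rejects malformed input
        except ValueError:
            continue                             # cleaning step: drop unprocessable data
        seen[key] = seen.get(key, False) or dnssec  # deduplicate by address
    total = len(seen)
    validating = sum(seen.values())
    return {
        "total": total,
        "validating": validating,
        "non_validating": total - validating,
        # Only anonymized prefixes would be published.
        "anonymized": sorted({anonymize(ip) for ip in seen}),
    }
```

Deduplication runs on full addresses before anonymization, so that two different resolvers in the same prefix are still counted separately.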

Processing of the information obtained in RIPE Atlas:

Measurements are run monthly on the RIPE Atlas platform using its command-line API. The results are then collected and processed with a series of Perl scripts and, finally, plotted using the Google Charts API. In addition, we always make the data available in open data format.

Let’s keep in mind that, in order to determine if a probe is using a validating resolver, the measurement uses a domain name with intentionally incorrect signatures. Because the name is not valid according to DNSSEC, a validating resolver should return an error when attempting to resolve that name. Conversely, if a positive response is obtained for the name, the resolver is not validating, as it has ignored the incorrect signature.
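The study processes these results with Perl scripts; purely as an illustration, the same decision can be sketched in Python. The sketch assumes results shaped like RIPE Atlas DNS measurement output, where each probe entry carries a `prb_id` and a `resultset` list whose items hold a base64-encoded answer buffer in `result.abuf`. Treat those field names, and the rule that a single NOERROR answer marks the probe as not validating, as assumptions to verify against the actual data.

```python
import base64

RCODE_NOERROR = 0
RCODE_SERVFAIL = 2


def probe_verdicts(results):
    """Map probe id -> 'validating' / 'not validating' / 'inconclusive'."""
    verdicts = {}
    for res in results:
        rcodes = set()
        for entry in res.get("resultset", []):
            abuf = entry.get("result", {}).get("abuf")
            if not abuf:
                continue  # timeouts and errors carry no answer buffer
            raw = base64.b64decode(abuf)
            if len(raw) >= 12:
                rcodes.add(raw[3] & 0x0F)  # RCODE: low 4 bits of the 4th header byte
        if rcodes == {RCODE_SERVFAIL}:
            verdict = "validating"
        elif RCODE_NOERROR in rcodes:
            verdict = "not validating"     # at least one resolver accepted the bad name
        else:
            verdict = "inconclusive"
        verdicts[res["prb_id"]] = verdict
    return verdicts
```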

Results

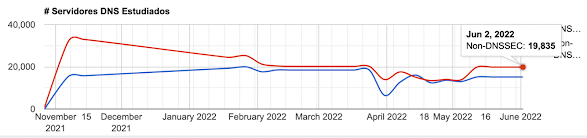

The chart below shows the number of servers that were studied, specifying those that use DNSSEC and those that do not. The blue and red lines represent servers that do and do not perform DNSSEC validation, respectively.

Number of DNS servers studied

Chart No. 1

As the image shows, on 2 June 2022 there were more recursive servers that did not perform DNSSEC validation than servers that did. Between 33,000 and 55,000 IP addresses were analyzed each week. On average, the split has remained at approximately 55% of servers that do not validate DNSSEC and 45% that do.

Number of IPv6 DNS servers studied

Chart No. 2

Chart No. 2 shows the history of DNSSEC queries over IPv6. It is worth noting that in several sampling periods there were more DNSSEC queries over IPv6 than over IPv4. The goal, of course, is for the red line to gradually decrease and for the blue line to gradually increase.

Ranking of countries with the highest DNSSEC validation rates

Using the RIPE Atlas measurement platform, it is possible to measure each country’s ability to validate DNSSEC. Each measurement can be grouped by country to create a ranking:

DNSSEC ranking by country

Chart No. 3

Ranking based on average DNSSEC validation rates measured from networks in the countries of Latin America and the Caribbean in May 2022.

The numbers inside the bars show the number of participating autonomous systems (ASes) for each country. Countries where we measured only one AS were not included.
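A ranking of this kind could be computed along the following lines. This is a sketch under stated assumptions, not the study's method: it assumes one sample per probe, takes the validation rate of each AS first, and then averages those rates per country, which is one plausible reading of "average validation rates from networks". The function name and the sample data are hypothetical.

```python
from collections import defaultdict


def country_ranking(samples, min_ases=2):
    """samples: iterable of (country, asn, validating) tuples, one per probe.

    Returns [(country, mean_rate_percent, n_ases)] sorted by rate, descending.
    Countries with fewer than min_ases measured ASes are left out.
    """
    per_as = defaultdict(lambda: [0, 0])  # (country, asn) -> [validating, total]
    for country, asn, validating in samples:
        counts = per_as[(country, asn)]
        counts[0] += 1 if validating else 0
        counts[1] += 1

    per_country = defaultdict(list)       # country -> list of per-AS rates (%)
    for (country, _asn), (ok, total) in per_as.items():
        per_country[country].append(100.0 * ok / total)

    ranking = [
        (country, sum(rates) / len(rates), len(rates))
        for country, rates in per_country.items()
        if len(rates) >= min_ases
    ]
    return sorted(ranking, key=lambda row: row[1], reverse=True)
```

Averaging per AS before averaging per country keeps a single network with many probes from dominating its country's figure.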

Summary

Based on the study of traffic captures, with data obtained over a period of eight months, the charts suggest a slow decrease in the number of servers that do not validate DNSSEC. There also appears to be greater DNSSEC deployment among IPv6 servers than among IPv4 servers.

It is to be expected that the data obtained using Atlas probes suggests greater deployment of DNSSEC validation than other, more generic data sources, as these probes are usually hosted on more advanced networks or by users who would deliberately enable DNSSEC. Even so, this data can be read as the “upper limit” of DNSSEC penetration and is also an important indicator of its evolution over time.

Open data

As usual, we at LACNIC want to make our information available so that anyone who wishes to do so can use it in their work:

https://stats.labs.lacnic.net/DNSSEC/opendata/

https://mvuy27.labs.lacnic.net/datos/

This data is being provided in the spirit of “Time Series Data.” In other words, we are making available data collected over time, which will make it very easy to see how our statistics fluctuate and to identify increases and/or drops in DNSSEC deployment by country, region, etc.

As always when we work on this type of project, we welcome suggestions for the improvement of both the implementation and the visualization of the information obtained.

References:

[1] https://stats.labs.lacnic.net/DNSSEC/dnssecstats.html

https://mvuy27.labs.lacnic.net/datos/dnssec-ranking-latest.html