By Hugo Salgado and Alejandro Acosta

Introduction and problem statement

In this document we want to discuss an existing IETF draft (a working document that may become a standard) that caught our attention. This draft involves two fascinating universes: IPv6 and DNS. It introduces some best practices for carrying DNS over IPv6.

Its title is “DNS over IPv6 Best Practices” and it can be found here.

What is the document about and what problem does it seek to solve?

The document describes an approach to how the Domain Name System (DNS) should be carried over IPv6 [RFC8200].

Some operational issues have been identified in carrying DNS packets over IPv6 and the draft seeks to address them.

Technical context

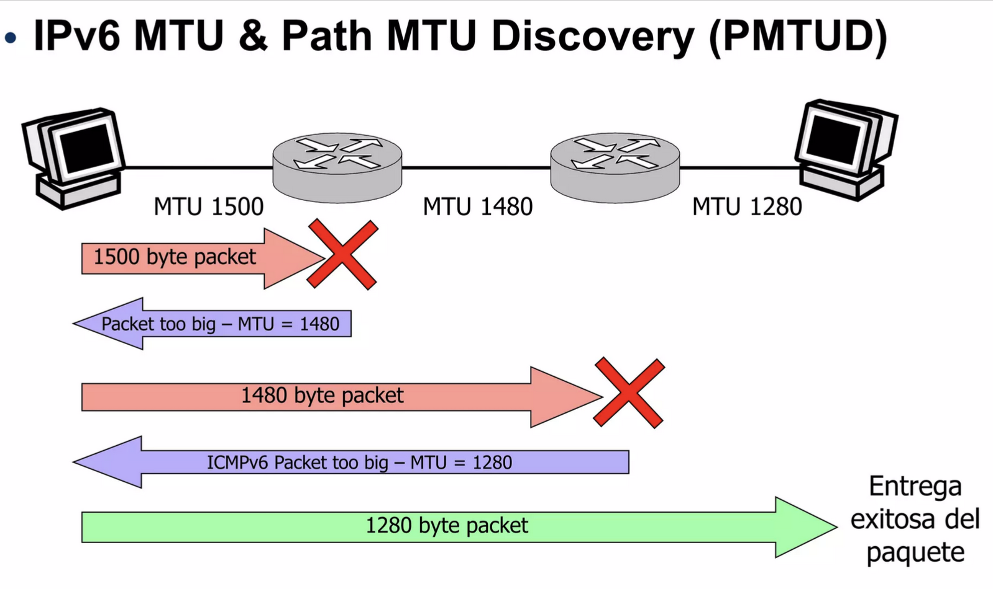

The IPv6 protocol requires a minimum link MTU of 1280 octets. According to Section 5 “Packet Size Issues” of RFC8200, every link in the Internet must have an MTU of 1280 octets or greater. If a link cannot convey a 1280-octet packet in one piece, link-specific fragmentation and reassembly must be provided at a layer below IPv6.

Successful operation of PMTUD in an example adapted to 1280-byte MTU

Image source: https://www.slideshare.net/slideshow/naveguemos-por-internet-con-ipv6/34651833#2

Using Path MTU Discovery (PMTUD) and IPv6 fragmentation (performed only by the source) allows larger packets to be sent. However, operational experience shows that sending large DNS packets over UDP over IPv6 results in high loss rates. Some studies, quite a few years old but useful for context, found that around 10% of IPv6 routers drop all IPv6 fragments and 40% block “Packet Too Big” messages, making client negotiation impossible (M. de Boer and J. Bosma, “Discovering Path MTU black holes on the Internet using RIPE Atlas”).

Most modern transport protocols like TCP [TCP] and QUIC [QUIC] include packet segmentation techniques that allow them to send larger data streams over IPv6.

A bit of history

The Domain Name System (DNS) was originally defined in RFC1034 and RFC1035. It was designed to run over several different transport protocols, including UDP and TCP, and has more recently been extended to run over QUIC. These transport protocols can be run over both IPv4 and IPv6.

When DNS was designed, the size of DNS packets carried over UDP was limited to 512 bytes. If a message was longer than 512 bytes, it was truncated and the Truncation (TC) bit was set to indicate that the response was incomplete, allowing the client to retry with TCP.
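As an illustration of the truncation mechanism, here is a minimal sketch of how a client could check the TC bit in a response. It only inspects the fixed 12-byte DNS header; the names `TC_MASK`, `build_header`, and `is_truncated` are our own and do not come from the draft or from any library.

```python
import struct

TC_MASK = 0x0200          # Truncation (TC) bit inside the 16-bit flags field
DNS_HEADER_LENGTH = 12    # ID, flags, and four 16-bit section counters


def build_header(msg_id: int, flags: int, qdcount: int = 0, ancount: int = 0,
                 nscount: int = 0, arcount: int = 0) -> bytes:
    """Pack a DNS header in network byte order (helper for the example)."""
    return struct.pack("!HHHHHH", msg_id, flags, qdcount, ancount, nscount, arcount)


def is_truncated(message: bytes) -> bool:
    """Return True when the TC bit is set, meaning the client should retry over TCP."""
    if len(message) < DNS_HEADER_LENGTH:
        raise ValueError("message is shorter than a DNS header")
    _msg_id, flags = struct.unpack("!HH", message[:4])
    return bool(flags & TC_MASK)


# A response (QR bit set) flagged as truncated, and a normal response.
truncated_response = build_header(0x1234, 0x8000 | TC_MASK, qdcount=1)
complete_response = build_header(0x1234, 0x8180, qdcount=1, ancount=1)
```

A client that receives `truncated_response` over UDP would repeat the same query over TCP, while `complete_response` can be used as is.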

With this original behavior, DNS over UDP over IPv6 never exceeded the IPv6 link MTU (maximum transmission unit), which avoided operational issues due to fragmentation. However, the EDNS0 extensions (RFC6891) raised the maximum to a theoretical 64 KB. As a result, some responses grew beyond the 512-byte UDP limit to sizes that exceeded the Path MTU and triggered TCP connections, which affects the scalability of DNS servers.
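To make the EDNS0 mechanism more concrete: a client announces the largest UDP response it is willing to receive in the CLASS field of an OPT pseudo-record appended to the query. The sketch below builds such a record with an empty RDATA. The function names are ours, and the value 4096 is just an example, not a recommendation.

```python
import struct

OPT_TYPE = 41  # resource record type assigned to the EDNS0 OPT pseudo-record


def build_opt_record(udp_payload_size: int, dnssec_ok: bool = False) -> bytes:
    """Build an OPT pseudo-record (no options) advertising a UDP payload size."""
    # Receivers treat values below 512 as 512, so this sketch simply rejects them.
    if not 512 <= udp_payload_size <= 0xFFFF:
        raise ValueError("UDP payload size must be between 512 and 65535")
    flags = 0x8000 if dnssec_ok else 0  # DO bit is the top bit of the flags
    root_name = b"\x00"
    # TYPE, CLASS (payload size), extended RCODE, version, flags, RDLENGTH
    return root_name + struct.pack("!HHBBHH", OPT_TYPE, udp_payload_size, 0, 0, flags, 0)


def advertised_size(opt_record: bytes) -> int:
    """Read back the UDP payload size from a record built by build_opt_record."""
    _rr_type, size = struct.unpack("!HH", opt_record[1:5])
    return size


example_opt = build_opt_record(4096)
```

Because the size travels in a 16-bit field, the upper bound is 65535 bytes, which is where the theoretical 64 KB figure comes from.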

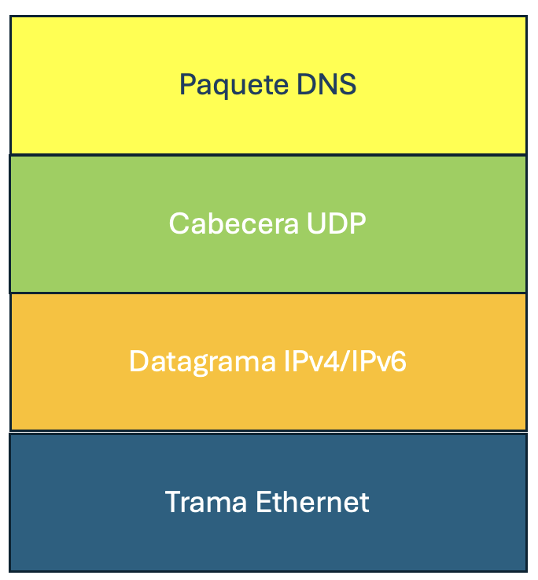

Encapsulating a DNS packet in an Ethernet frame

Let’s talk about DNS over IPv6

DNS over IPv6 is designed to run over UDP or other transport protocols like TCP or QUIC. UDP only provides source and destination ports, a length field, and a simple checksum. It is a connectionless protocol. In contrast, TCP and QUIC offer additional features such as packet segmentation, reliability, error correction, and connection state.

DNS over UDP over IPv6 is suitable for small packet sizes, but becomes less reliable with larger sizes, particularly when IPv6 datagram fragmentation is required.

On the other hand, DNS over TCP or QUIC over IPv6 works well with all packet sizes. However, running a stateful protocol such as TCP or QUIC places greater demands on the DNS server’s resources (and on other equipment such as firewalls, DPI, and IDS devices), which can potentially impact scalability. This may be a reasonable tradeoff for servers that need to send larger DNS response packets.

For DNS over UDP, the draft recommends limiting the size of DNS packets sent over IPv6 to 1280 octets. This avoids the need for IPv6 fragmentation or Path MTU Discovery, which ensures greater reliability.

Most DNS queries and responses will fit within this packet size limit and can therefore be sent over UDP. Larger DNS packets should not be sent over UDP; instead, they should be sent over TCP or QUIC, as described in the next section.
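To get a feel for how much room this leaves for the DNS message itself, the sketch below subtracts the fixed IPv6 header (40 octets) and the UDP header (8 octets) from the 1280-octet limit. It assumes the limit applies to the whole IPv6 packet and that no extension headers are present; `choose_transport` is a hypothetical helper of ours, not something defined in the draft.

```python
IPV6_MIN_MTU = 1280      # minimum link MTU required by IPv6, in octets
IPV6_HEADER_LENGTH = 40  # fixed IPv6 header, assuming no extension headers
UDP_HEADER_LENGTH = 8

# Largest DNS message that fits in a single 1280-octet IPv6/UDP packet.
MAX_DNS_UDP_PAYLOAD = IPV6_MIN_MTU - IPV6_HEADER_LENGTH - UDP_HEADER_LENGTH


def choose_transport(dns_message_length: int) -> str:
    """Pick UDP when the message fits in one minimum-MTU packet, else TCP."""
    if dns_message_length < 0:
        raise ValueError("message length cannot be negative")
    return "udp" if dns_message_length <= MAX_DNS_UDP_PAYLOAD else "tcp"
```

Under these assumptions the budget is 1232 octets of DNS message, so a 1232-octet response could still go over UDP while a 1233-octet one would be sent over TCP (or QUIC).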

DNS over TCP and QUIC

When larger DNS packets need to be carried, it is recommended to run DNS over TCP or QUIC. These protocols handle segmentation and reliably adjust their segment size for different link and path MTU values, which makes them much more reliable than using UDP with IPv6 fragmentation.

Section 4.2.2 of [RFC1035] describes the use of TCP for carrying DNS messages, while [RFC9250] explains how to implement DNS over QUIC to provide transport confidentiality. Additionally, operational requirements for DNS over TCP are described in [RFC9210].
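As a reminder of what the TCP usage in Section 4.2.2 of RFC1035 looks like on the wire, each DNS message sent over TCP is prefixed with a two-byte length field. The following sketch frames messages and splits a received byte stream back into messages; the function names are ours, and real code would read from a socket rather than from an in-memory buffer.

```python
import struct


def frame_for_tcp(message: bytes) -> bytes:
    """Prefix a DNS message with its length as a 16-bit big-endian integer."""
    if len(message) > 0xFFFF:
        raise ValueError("a DNS message over TCP cannot exceed 65535 bytes")
    return struct.pack("!H", len(message)) + message


def split_tcp_stream(buffer: bytes):
    """Return (complete_messages, leftover_bytes) from a received byte stream."""
    messages = []
    offset = 0
    while len(buffer) - offset >= 2:
        (length,) = struct.unpack_from("!H", buffer, offset)
        if len(buffer) - offset - 2 < length:
            break  # the rest of this message has not arrived yet
        start = offset + 2
        messages.append(buffer[start:start + length])
        offset = start + length
    return messages, buffer[offset:]


# Two complete messages followed by the beginning of a third one.
stream = frame_for_tcp(b"abc") + frame_for_tcp(b"de") + b"\x00\x05xy"
complete, leftover = split_tcp_stream(stream)
```

Since TCP delivers a byte stream, the length prefix is what lets the receiver find message boundaries regardless of how the data was segmented along the path.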

Security

Switching from UDP to TCP/QUIC for large responses means that the DNS server must maintain additional state for each query received over TCP/QUIC. This will consume additional resources on the servers and affect the scalability of the DNS system. This situation may also leave the servers vulnerable to Denial of Service (DoS) attacks.

Is this the correct solution?

While we believe this solution will bring many benefits to the IPv6 and DNS ecosystem, it is a temporary operational fix and does not solve the root problem.

We believe the correct solution is ensuring that source fragmentation works, that PMTUD is not broken along the way, and that security devices allow fragmentation headers. This requires changes across various Internet actors, which may take a long time, but that doesn’t mean that we should abandon our efforts or stop educating others about the importance of doing the right thing.